Retrieval Augmented Generation (RAG) - A beginner's guide

March 1st, 2024

Introduction

Large Language Models (LLMs) have undeniably revolutionized our interaction with technology, particularly in question answering tasks. However, they are bound by the information they were trained on and may struggle with events occurring after their training. This limitation often leads to either inaccurate answers or refusal to respond. Retrieval Augmented Generation (RAG) technology addresses this gap by enabling LLMs to access external knowledge, thereby enhancing their capabilities.

What is Retrieval Augmented Generation (RAG)?

Retrieval Augmented Generation (RAG) represents a recent advancement in AI technology. It empowers foundational models to incorporate external information inputs, including the most up-to-date data, for more precise outputs.

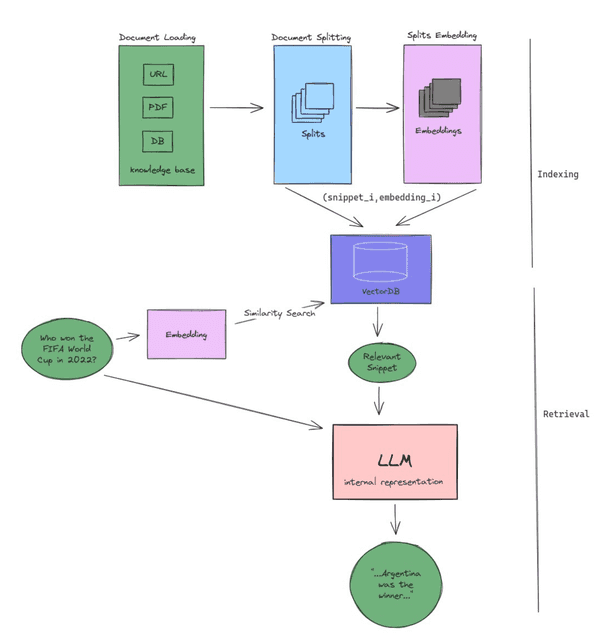

RAG achieves this by supplementing the knowledge stored in the LLM's weights with relevant external knowledge supplied through the prompt. Implementing RAG involves two key phases: indexing and retrieval. During indexing, a vector database containing the knowledge base is constructed, comprising embeddings and their corresponding text snippets. In the retrieval phase, the system embeds the user's question, compares that embedding against the vectors in the database, and retrieves the most similar snippets. The retrieved snippets are then fed into the LLM together with the user's question so that it can generate a more accurate answer.
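
The two phases described above can be sketched in a few lines of plain Python. This is a toy illustration, not a production setup: `embed` is a hypothetical stand-in that counts words instead of calling a real embedding model, the "vector database" is just a list, and the similarity score is a plain dot product of word counts. All function names here are assumptions made for the example.

```python
from collections import Counter

def embed(text):
    # Hypothetical stand-in for an embedding model: a bag-of-words count vector.
    cleaned = text.lower().replace("?", " ").replace(".", " ")
    return Counter(cleaned.split())

def build_index(snippets):
    # Indexing phase: store each snippet next to its embedding.
    return [(embed(s), s) for s in snippets]

def retrieve(index, question, k=1):
    # Retrieval phase: embed the question and rank snippets by similarity.
    q = embed(question)

    def score(vec):
        # Dot product of word counts (a Counter returns 0 for missing words).
        return sum(q[w] * vec[w] for w in q)

    ranked = sorted(index, key=lambda pair: score(pair[0]), reverse=True)
    return [snippet for _, snippet in ranked[:k]]

def build_prompt(question, snippets):
    # Augmentation: place the retrieved snippets in front of the question.
    context = "\n".join(snippets)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

snippets = [
    "Argentina won the FIFA World Cup in 2022.",
    "France won the FIFA World Cup in 2018.",
    "The Olympic Games are held every four years.",
]
index = build_index(snippets)
question = "Who won the FIFA World Cup in 2022?"
top = retrieve(index, question, k=1)
prompt = build_prompt(question, top)
print(prompt)
```

The resulting `prompt` string is what would be sent to the LLM; the model call itself is left out so the sketch stays self-contained.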

It's worth noting that external data is first segmented into chunks and each chunk is embedded into a vector to make similarity comparisons possible. Cosine similarity is commonly used for the comparison: it measures the angle between two vectors, so chunks whose vectors point in nearly the same direction as the question's vector are treated as relevant.
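
As a small worked illustration of the comparison step, cosine similarity between two vectors is their dot product divided by the product of their lengths. The three-dimensional vectors below are made up for the example; real embeddings typically have hundreds or thousands of dimensions.

```python
import math

def cosine_similarity(a, b):
    # dot(a, b) / (|a| * |b|); assumes neither vector is all zeros.
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

question_vec = [1.0, 2.0, 0.0]
same_direction = [2.0, 4.0, 0.0]  # same direction, twice the length
orthogonal = [0.0, 0.0, 3.0]      # shares no component with the question

sim_same = cosine_similarity(question_vec, same_direction)
sim_orth = cosine_similarity(question_vec, orthogonal)
print(sim_same, sim_orth)
```

Because the score depends only on direction, the longer vector still scores approximately 1 against the question, while the orthogonal vector scores 0.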

Analogously, consider the open book exam scenario: the user's question resembles the exam, while the vector database acts as a resource akin to a book that can be referenced to provide precise answers during the exam.

Why and When to use RAG Technology?

The adoption of RAG technology is driven by specific challenges it addresses and the opportunities it offers in AI applications:

- Enhanced Learning Methods: RAG provides alternative approaches for teaching Large Language Models compared to traditional fine-tuning methods. While fine-tuning is effective, it can be costly and challenging to incorporate the latest information. RAG offers a more cost-effective solution that doesn't require extensive retraining. This aspect is particularly beneficial when the LLM application necessitates access to specific data not present in its training set.

- Improved Adaptability: RAG becomes invaluable when an LLM requires access to real-time or recent data updates. Traditional training methods often struggle to keep up with the pace of information evolution. RAG mitigates this challenge by enabling the integration of external data during the generation process, ensuring the LLM can provide accurate responses based on the most current information available.

- Enhanced Observability: Traditional LLMs lack transparency in their decision-making processes. When queried, it's often unclear how they arrived at a particular answer. RAG enhances observability by enabling the injection of relevant text snippets from the knowledge base into the LLM's generation process. This transparency enhances trust in the system's outputs and facilitates debugging and improvement efforts.

- Cost-Effectiveness: Training an LLM from scratch or fine-tuning it with custom data can be prohibitively expensive. RAG offers a more cost-effective alternative by leveraging existing models and augmenting them with external knowledge. This cost-effectiveness makes RAG accessible to a wider range of applications and organizations, democratizing access to advanced AI capabilities.

Implementing RAG Technology

As shown in the graphical example above, the question was "who won the FIFA world cup in 2022?" To answer this question, we must first scrape the relevant data from the internet.

import requests

from bs4 import BeautifulSoup

from langchain_text_splitters import CharacterTextSplitter

We will use BeautifulSoup for web data extraction and the LangChain text splitter for segmentation.

url = "https://olympics.com/en/news/fifa-world-cup-winners-list-champions-record"

response = requests.get(url)

soup = BeautifulSoup(response.content, 'html.parser')

# Find all the text on the page

text = soup.get_text()

text_splitter = CharacterTextSplitter(chunk_size=1000, chunk_overlap=100)

# Wrap the raw text in Document objects and split it into chunks

docs = text_splitter.create_documents([text])
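
To make the `chunk_size` and `chunk_overlap` parameters concrete, here is a simplified sliding-window splitter. It only illustrates the idea: the helper name `split_with_overlap` is hypothetical, and LangChain's `CharacterTextSplitter` works differently in detail, since it first splits on a separator and then merges the pieces up to the size limit.

```python
def split_with_overlap(text, chunk_size, chunk_overlap):
    # Each new chunk starts (chunk_size - chunk_overlap) characters after
    # the previous one, so neighbouring chunks share chunk_overlap characters.
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last chunk already reaches the end of the text
    return chunks

sample = "abcdefghijklmnopqrstuvwxyz"
chunks = split_with_overlap(sample, chunk_size=10, chunk_overlap=3)
print(chunks)
# → ['abcdefghij', 'hijklmnopq', 'opqrstuvwx', 'vwxyz']
```

The overlap means a sentence cut at a chunk boundary still appears intact in at least one of the neighbouring chunks, at the cost of storing some text twice.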

Now that our text data is ready, we must embed it.

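We can connect to our Pinecone index and insert the chunked docs with PineconeVectorStore.from_documents. This function takes the docs, embeds them, and stores them in the Pinecone index. The code below assumes that the index already exists and that the OpenAI and Pinecone API keys are set in the environment.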

from langchain_openai import OpenAIEmbeddings

from langchain_pinecone import PineconeVectorStore

embeddings = OpenAIEmbeddings()

index_name = "test-index"

vectordb = PineconeVectorStore.from_documents(docs, embeddings, index_name=index_name)

Now we have done all the necessary steps in the indexing phase and can start with the retrieval phase. We will achieve this by constructing a retriever that searches the index for the chunks most similar to a query (here, the top two).

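from langchain.chains import ConversationalRetrievalChain

from langchain_openai import OpenAI

query = "who won the FIFA world cup in 2022?"

# retrieval system setup: return the 2 chunks most similar to the query

retriever = vectordb.as_retriever(search_type="similarity", search_kwargs={"k": 2})

qa = ConversationalRetrievalChain.from_llm(OpenAI(temperature=0), retriever)

# the chain expects chat history as a list of (question, answer) pairs

chat_history = []

def execute_conversation(question):

    result = qa.invoke({"question": question, "chat_history": chat_history})

    chat_history.append((question, result["answer"]))

    return result["answer"]

print(execute_conversation(query))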

This returns "Argentina was the winner in Fifa 2022 world cup."

Conclusion

In conclusion, RAG represents a fusion of LLMs with Information Retrieval capabilities. By comparing embedded queries with an embedded knowledge base through vector similarity, RAG enhances the accuracy of responses by injecting relevant text snippets into the LLM's generation process. This technology holds significant promise in advancing AI applications, particularly in enhancing the adaptability and precision of question answering systems.